Publications

Publications by category in reverse chronological order. Generated by jekyll-scholar.

2025

- WSDM

FedGF: Enhancing Structural Knowledge via Graph Factorization for Federated Graph Learning
Pengyang Zhou, Chaochao Chen*, Weiming Liu, Xinting Liao, Fengyuan Yu, Zhihui Fu, Xingyu Lou, Wu Wen, Xiaolin Zheng, and Jun Wang
In Proceedings of the Eighteenth ACM International Conference on Web Search and Data Mining, Mar 2025

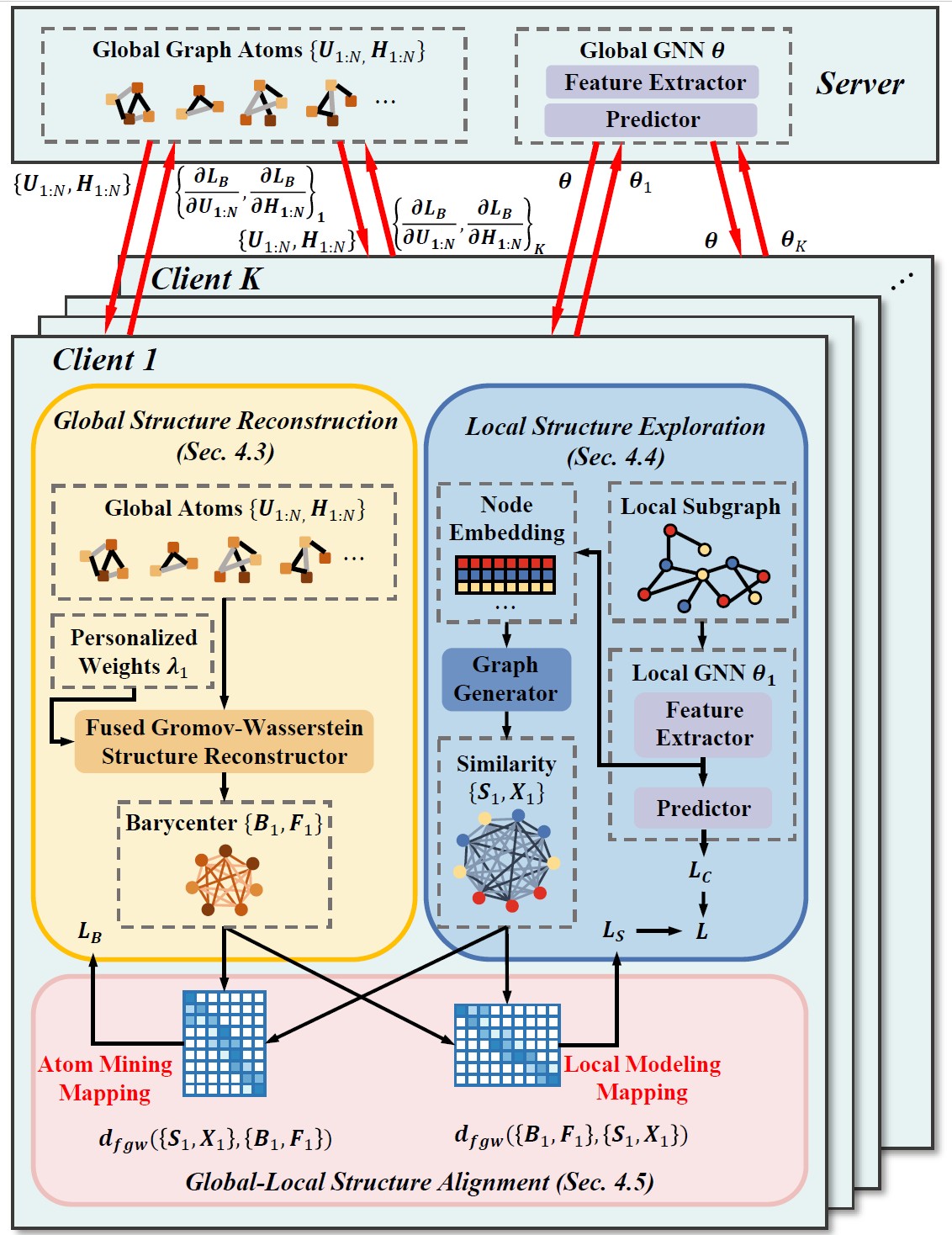

Federated graph learning involves training graph neural networks distributively on local graphs and aggregating model parameters in a central server. However, existing methods fail to effectively capture and leverage the inherent global structures, hindering local structural modeling. To address this, we propose Federated Graph Factorization (FedGF), which enhances structural knowledge via privacy-preserving graph factorization. Specifically, FedGF includes three modules, i.e., global structure reconstruction (GSR), local structure exploration (LSE), and global-local structure alignment (GLSA). First, GSR factorizes client graphs into a series of learnable graph atoms and conducts reconstruction to capture the globally shared structure. Then, LSE explores the local structure, mining potential but unrevealed connections within client subgraphs. GLSA further aligns the global and local structure to alternately refine the graph atoms and GNN model, enhancing the overall structural modeling. Extensive experiments on six datasets consistently validate the effectiveness of FedGF.

@inproceedings{zhou2025fedgf,
  title = {FedGF: Enhancing Structural Knowledge via Graph Factorization for Federated Graph Learning},
  author = {Zhou, Pengyang and Chen, Chaochao and Liu, Weiming and Liao, Xinting and Yu, Fengyuan and Fu, Zhihui and Lou, Xingyu and Wen, Wu and Zheng, Xiaolin and Wang, Jun},
  booktitle = {Proceedings of the Eighteenth ACM International Conference on Web Search and Data Mining},
  pages = {448--456},
  year = {2025},
  month = mar,
  doi = {10.1145/3701551.3703493},
  url = {https://doi.org/10.1145/3701551.3703493}
}

- WWW

Plug and Play: Enabling Pluggable Attribute Unlearning in Recommender Systems
XiaoHua Feng, Yuyuan Li, Fengyuan Yu, Li Zhang, Chaochao Chen, and Xiaolin Zheng
In Proceedings of the ACM on Web Conference 2025, Apr 2025

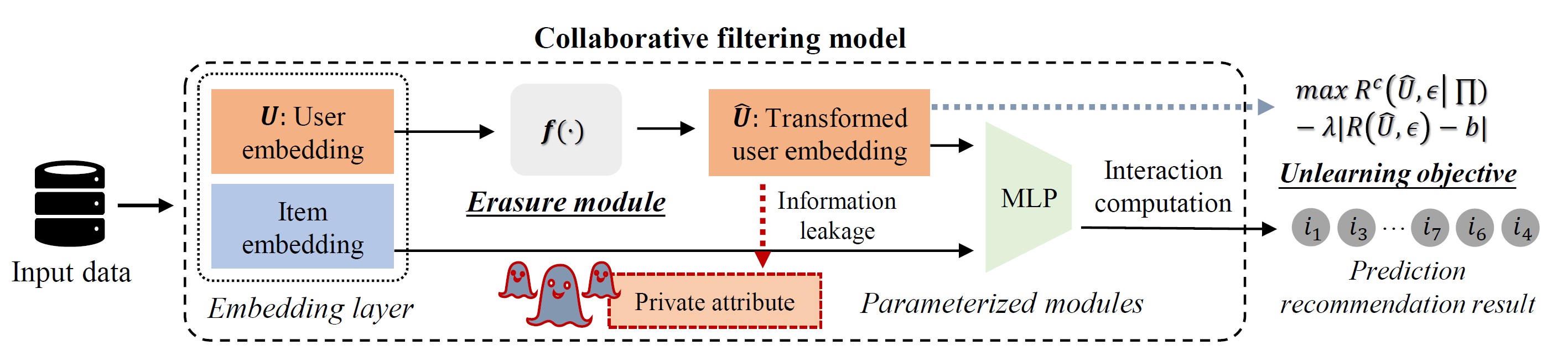

With the escalating privacy concerns in recommender systems, attribute unlearning has drawn widespread attention as an effective approach against attribute inference attacks. This approach focuses on unlearning users’ private attributes to reduce the performance of attackers while preserving the overall effectiveness of recommendation. Current research attempts to achieve attribute unlearning through adversarial training and distribution alignment in the static setting. However, these methods often struggle in dynamic real-world environments, particularly in scenarios where unlearning requests are frequently updated. In this paper, we first identify three main challenges of current methods in dynamic environments, i.e., irreversible operation, low efficiency, and unsatisfactory recommendation preservation. To overcome these challenges, we propose a Pluggable Attribute Unlearning framework, PAU. Upon receiving an unlearning request, PAU plugs an additional erasure module into the original model to achieve unlearning. This module can perform a reverse operation if the request is later withdrawn. To enhance the efficiency of unlearning, we introduce rate distortion theory and reduce the attack performance by maximizing the encoded bits required for users’ embeddings within the same class of the unlearned attribute and minimizing those for different classes, which eliminates the need to calculate the centroid distribution for alignment. We further preserve recommendation performance by constraining the compactness of the user embedding space around a reasonable flood level. Extensive experiments conducted on four real-world datasets and three mainstream recommendation models demonstrate the effectiveness of our proposed framework.

@inproceedings{feng2025plug,
  title = {Plug and Play: Enabling Pluggable Attribute Unlearning in Recommender Systems},
  author = {Feng, XiaoHua and Li, Yuyuan and Yu, Fengyuan and Zhang, Li and Chen, Chaochao and Zheng, Xiaolin},
  booktitle = {Proceedings of the ACM on Web Conference 2025},
  pages = {2689--2699},
  year = {2025},
  month = apr,
  doi = {10.1145/3696410.3714671},
  url = {https://doi.org/10.1145/3696410.3714671}
}

- Survey

A Survey on Generative Model Unlearning: Fundamentals, Taxonomy, Evaluation, and Future Direction
Xiaohua Feng, Jiaming Zhang, Fengyuan Yu, Chengye Wang, Li Zhang, Kaixiang Li, Yuyuan Li, Chaochao Chen, and Jianwei Yin
arXiv preprint arXiv:2507.19894, Jul 2025

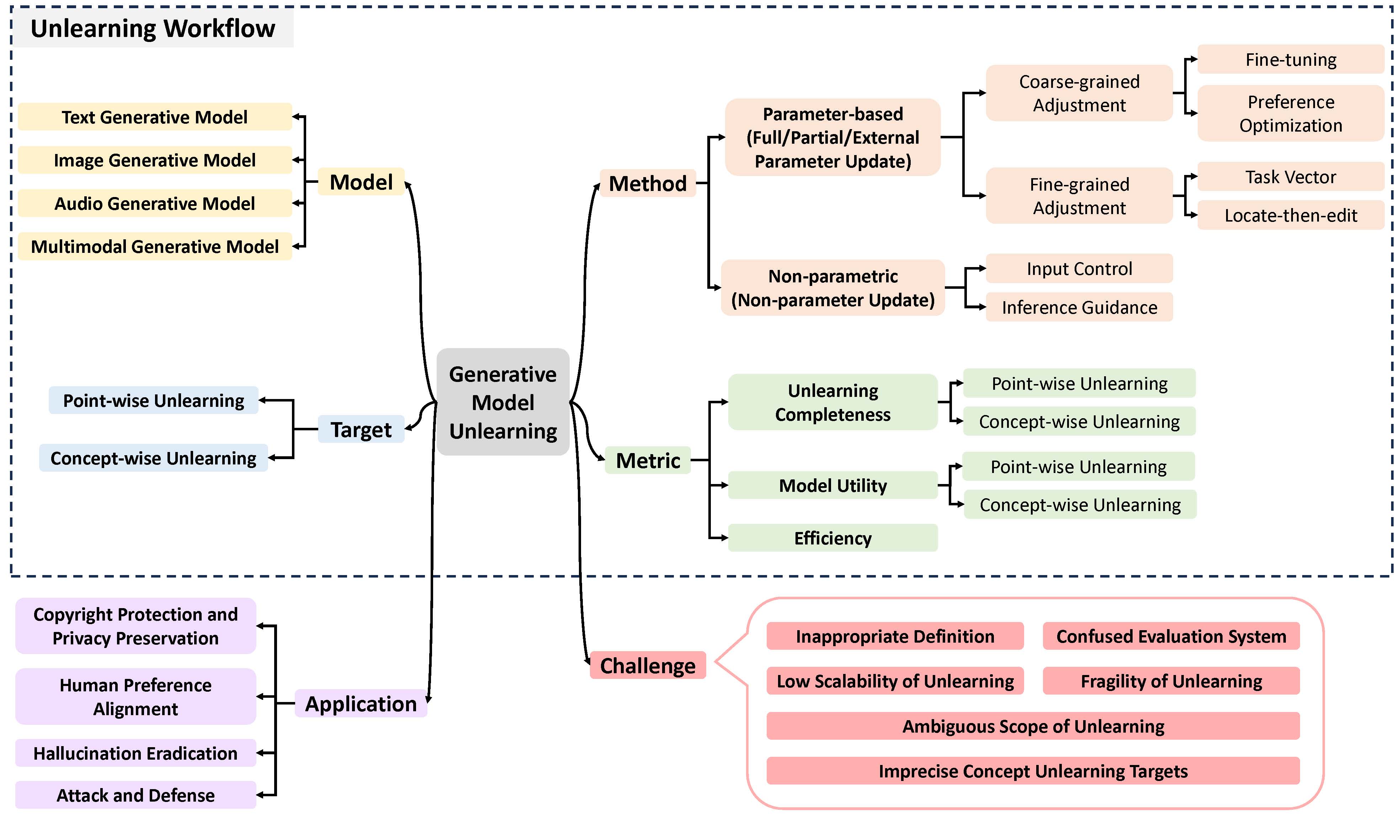

With the rapid advancement of generative models, associated privacy concerns have attracted growing attention. To address this, researchers have begun adapting machine unlearning techniques from traditional classification models to generative settings. Although notable progress has been made in this area, a unified framework for systematically organizing and integrating existing work is still lacking. The substantial differences among current studies in terms of unlearning objectives and evaluation protocols hinder the objective and fair comparison of various approaches. While some studies focus on specific types of generative models, they often overlook the commonalities and systematic characteristics inherent in Generative Model Unlearning (GenMU). To bridge this gap, we provide a comprehensive review of current research on GenMU and propose a unified analytical framework for categorizing unlearning objectives, methodological strategies, and evaluation metrics. In addition, we explore the connections between GenMU and related techniques, including model editing, reinforcement learning from human feedback, and controllable generation. We further highlight the potential practical value of unlearning techniques in real-world applications. Finally, we identify key challenges and outline future research directions aimed at laying a solid foundation for further advancements in this field. We consistently maintain the related open-source materials at this https URL.

@article{Feng2025GenMU,
  title = {A Survey on Generative Model Unlearning: Fundamentals, Taxonomy, Evaluation, and Future Direction},
  author = {Feng, Xiaohua and Zhang, Jiaming and Yu, Fengyuan and Wang, Chengye and Zhang, Li and Li, Kaixiang and Li, Yuyuan and Chen, Chaochao and Yin, Jianwei},
  journal = {arXiv preprint arXiv:2507.19894},
  year = {2025},
  month = jul,
  doi = {10.48550/arXiv.2507.19894},
  url = {https://arxiv.org/abs/2507.19894},
  eprint = {2507.19894},
  archiveprefix = {arXiv},
  primaryclass = {cs.LG}
}

2024

- CVPR

Rethinking the Representation in Federated Unsupervised Learning with Non-IID Data
Xinting Liao, Weiming Liu, Chaochao Chen*, Pengyang Zhou, Fengyuan Yu, Huabin Zhu, Binhui Yao, Tao Wang, Xiaolin Zheng, and Yanchao Tan
In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Jun 2024

Federated learning achieves effective performance in modeling decentralized data. In practice, client data are not well labeled, which creates the potential for federated unsupervised learning (FUSL) with non-IID data. However, the performance of existing FUSL methods suffers from insufficient representations, i.e., (1) representation collapse entanglement among local and global models, and (2) inconsistent representation spaces among local models. The former indicates that representation collapse in a local model will subsequently impact the global model and other local models. The latter means that clients model data representations with inconsistent parameters due to the deficiency of supervision signals. In this work, we propose FedU2, which enhances the generation of uniform and unified representations in FUSL with non-IID data. Specifically, FedU2 consists of a flexible uniform regularizer (FUR) and an efficient unified aggregator (EUA). FUR in each client avoids representation collapse by dispersing samples uniformly, and EUA in the server promotes unified representation by constraining consistent client model updating. To extensively validate the performance of FedU2, we conduct both cross-device and cross-silo evaluation experiments on two benchmark datasets, i.e., CIFAR10 and CIFAR100.

@inproceedings{liao2024rethinking,
  title = {Rethinking the Representation in Federated Unsupervised Learning with Non-IID Data},
  author = {Liao, Xinting and Liu, Weiming and Chen, Chaochao and Zhou, Pengyang and Yu, Fengyuan and Zhu, Huabin and Yao, Binhui and Wang, Tao and Zheng, Xiaolin and Tan, Yanchao},
  booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  pages = {22841--22850},
  year = {2024},
  month = jun,
  doi = {10.1109/CVPR52733.2024.02155},
  url = {https://openaccess.thecvf.com/content/CVPR2024/html/Liao_Rethinking_the_Representation_in_Federated_Unsupervised_Learning_with_Non-IID_Data_CVPR_2024_paper.html}
}

- NeurIPS

FOOGD: Federated Collaboration for Both Out-of-Distribution Generalization and Detection
Xinting Liao, Weiming Liu, Pengyang Zhou, Fengyuan Yu, Jiahe Xu, Jun Wang, Wenjie Wang, Chaochao Chen, and Xiaolin Zheng
In Proceedings of the 38th International Conference on Neural Information Processing Systems, Dec 2024

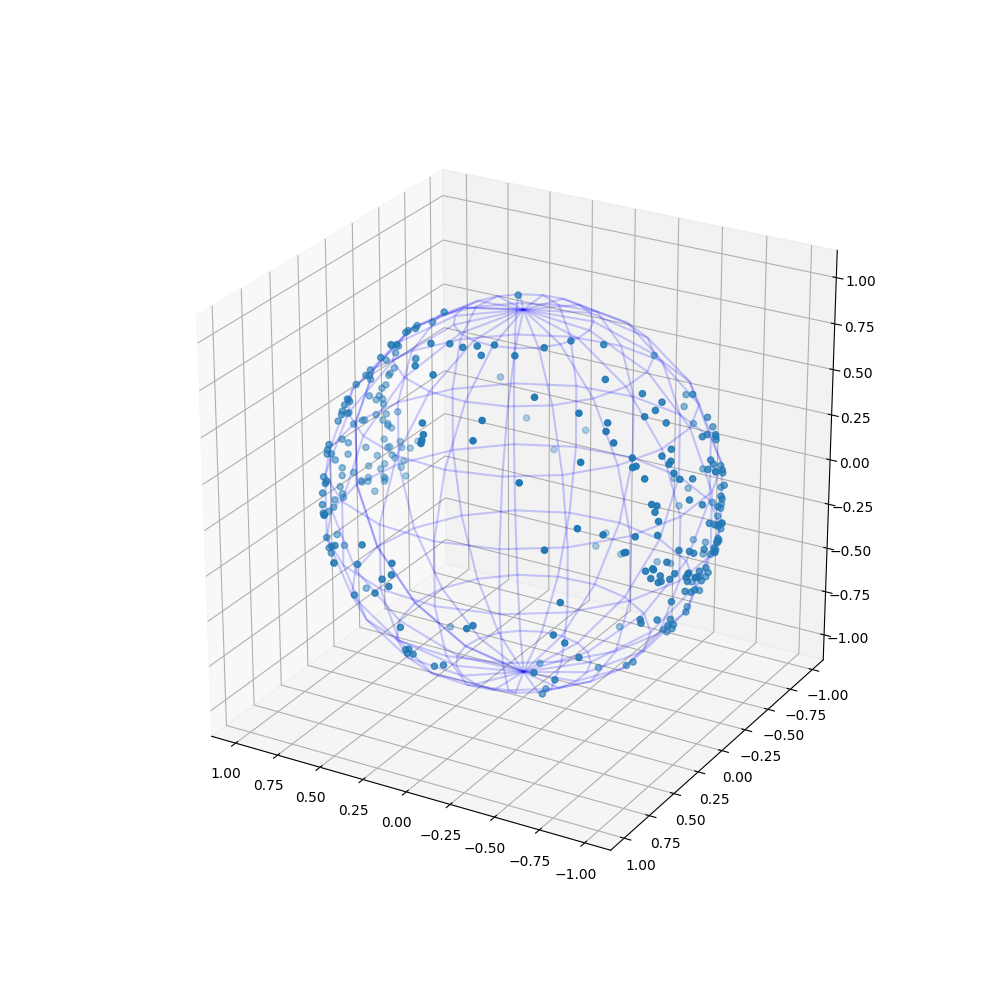

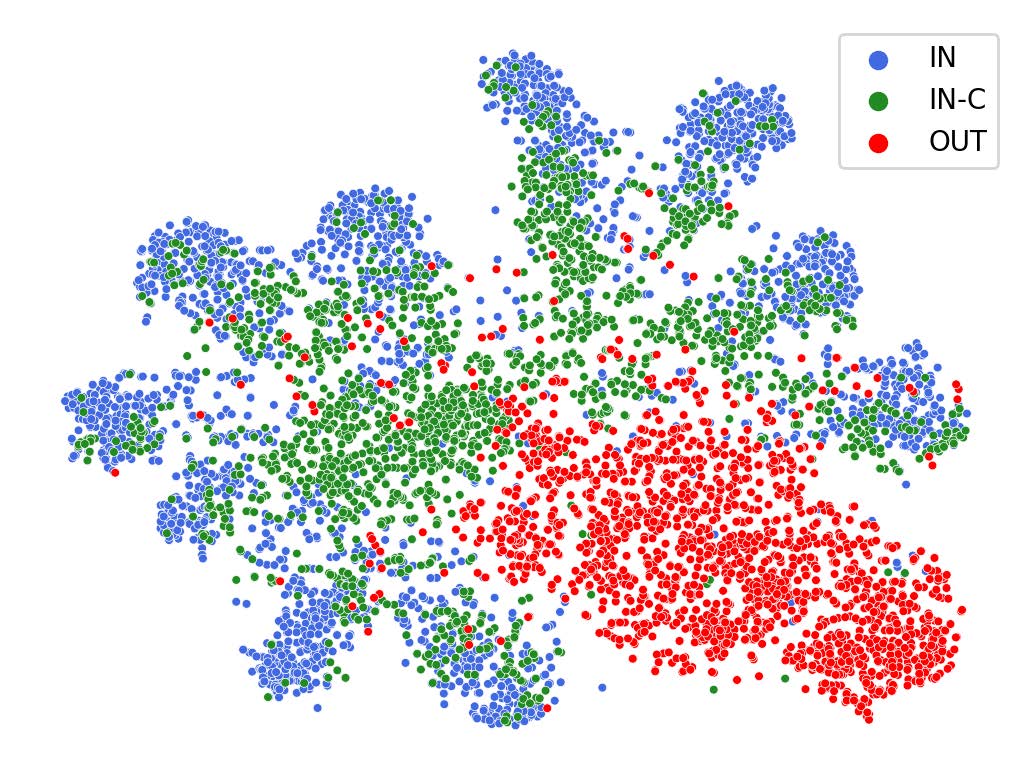

Federated learning (FL) is a promising machine learning paradigm that collaborates with client models to capture global knowledge. However, deploying FL models in real-world scenarios remains unreliable due to the coexistence of in-distribution data and unexpected out-of-distribution (OOD) data, such as covariate-shift and semantic-shift data. Current FL research typically addresses either covariate-shift data through OOD generalization or semantic-shift data via OOD detection, overlooking the simultaneous occurrence of various OOD shifts. In this work, we propose FOOGD, a method that estimates the probability density of each client and obtains a reliable global distribution as guidance for the subsequent FL process. First, SM3D in FOOGD estimates a score model for arbitrary distributions without prior constraints and effectively detects semantic-shift data. Then, SAG in FOOGD provides invariant yet diverse knowledge for both local covariate-shift generalization and client performance generalization. In empirical validations, FOOGD enjoys three main advantages: (1) reliably estimating non-normalized decentralized distributions, (2) detecting semantic-shift data via score values, and (3) generalizing to covariate-shift data by regularizing the feature extractor. The project is open-sourced at https://github.com/XeniaLLL/FOOGD-main.git.

@inproceedings{liao2024foogd,
  title = {FOOGD: Federated Collaboration for Both Out-of-Distribution Generalization and Detection},
  author = {Liao, Xinting and Liu, Weiming and Zhou, Pengyang and Yu, Fengyuan and Xu, Jiahe and Wang, Jun and Wang, Wenjie and Chen, Chaochao and Zheng, Xiaolin},
  booktitle = {Proceedings of the 38th International Conference on Neural Information Processing Systems},
  volume = {37},
  pages = {132908--132945},
  year = {2024},
  month = dec,
  doi = {10.48550/arXiv.2410.11397},
  url = {https://proceedings.neurips.cc/paper_files/paper/2024/hash/efd1e27afcb94addd03b9e14c8d9f78f-Abstract-Conference.html}
}

- IJMLC

Recommendations for Inactive Users: a Cross Domain Approach with Graph Neural Networks
Jun Zhou, Ziqi Liu, Meijuan Tan, Xiangyu Meng, Xiaocheng Cheng, Jianping Wei, Zhiqiang Zhang, Fengyuan Yu, Chaochao Chen, and Jianwei Yin
International Journal of Machine Learning and Cybernetics, Nov 2024

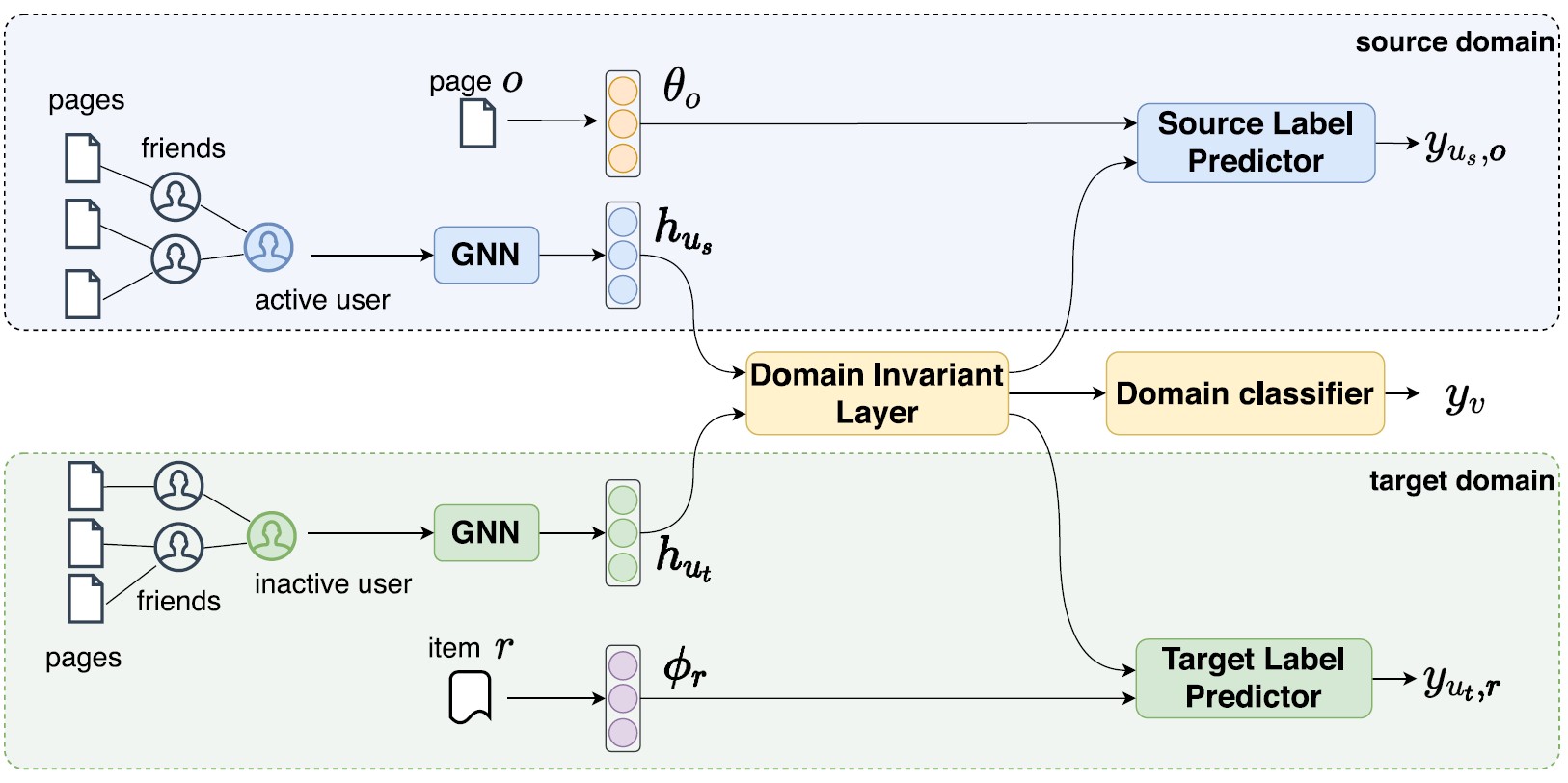

Understanding inactive users and satisfying them via recommendation are the keys to user growth and engagement for many Internet companies. However, learning inactive users’ representations and their preferences is still challenging because the available features are missing and the positive responses or labels are insufficient. In this paper, we propose a cross domain learning approach to exclusively recommend customized items to inactive users by leveraging the knowledge of active users. In particular, based on the observation that users’ browsing behaviors, i.e., page browsing in an app, are correlated with the browsing behaviors of their social networks, we represent users, whether active or inactive, by their friends’ browsing behaviors using a graph neural network (GNN) layer atop a heterogeneous graph defined on social networks (user-user friendships) and browsing behaviors (user-page clicks). We jointly optimize the learning tasks of active users in the source domain and inactive users in the target domain based on the domain invariant features extracted from the embedding of our GNN layer, where the domain invariant features are learned to benefit both tasks on active and inactive users and are indiscriminate with respect to the shift between the domains. We describe our cross domain graph neural networks (CD-GNN) together with our learning and serving system, and show that our approach significantly improves the click-through rate of inactive users in a real-world environment at Alipay by 28.55%. We also conduct extensive experiments to show that our approach can capture the preferences of inactive users well, and significantly outperforms state-of-the-art approaches in terms of RMSE by 5%, especially when we decrease the available features and samples of inactive users in the target domain using public data.

@article{zhou2024,
  title = {Recommendations for Inactive Users: a Cross Domain Approach with Graph Neural Networks},
  author = {Zhou, Jun and Liu, Ziqi and Tan, Meijuan and Meng, Xiangyu and Cheng, Xiaocheng and Wei, Jianping and Zhang, Zhiqiang and Yu, Fengyuan and Chen, Chaochao and Yin, Jianwei},
  journal = {International Journal of Machine Learning and Cybernetics},
  year = {2024},
  month = nov,
  pages = {1--15},
  doi = {10.1007/s13042-024-02423-w},
  url = {https://doi.org/10.1007/s13042-024-02423-w}
}

2023

- IEEE TDSC

ERNN: Error-resilient RNN for Encrypted Traffic Detection Towards Network-Induced Phenomena
Ziming Zhao, Zhaoxuan Li, Jialun Jiang, Fengyuan Yu, Fan Zhang*, Congyuan Xu, Xinjie Zhao, Rui Zhang, and Shize Guo
IEEE Transactions on Dependable and Secure Computing, Feb 2023

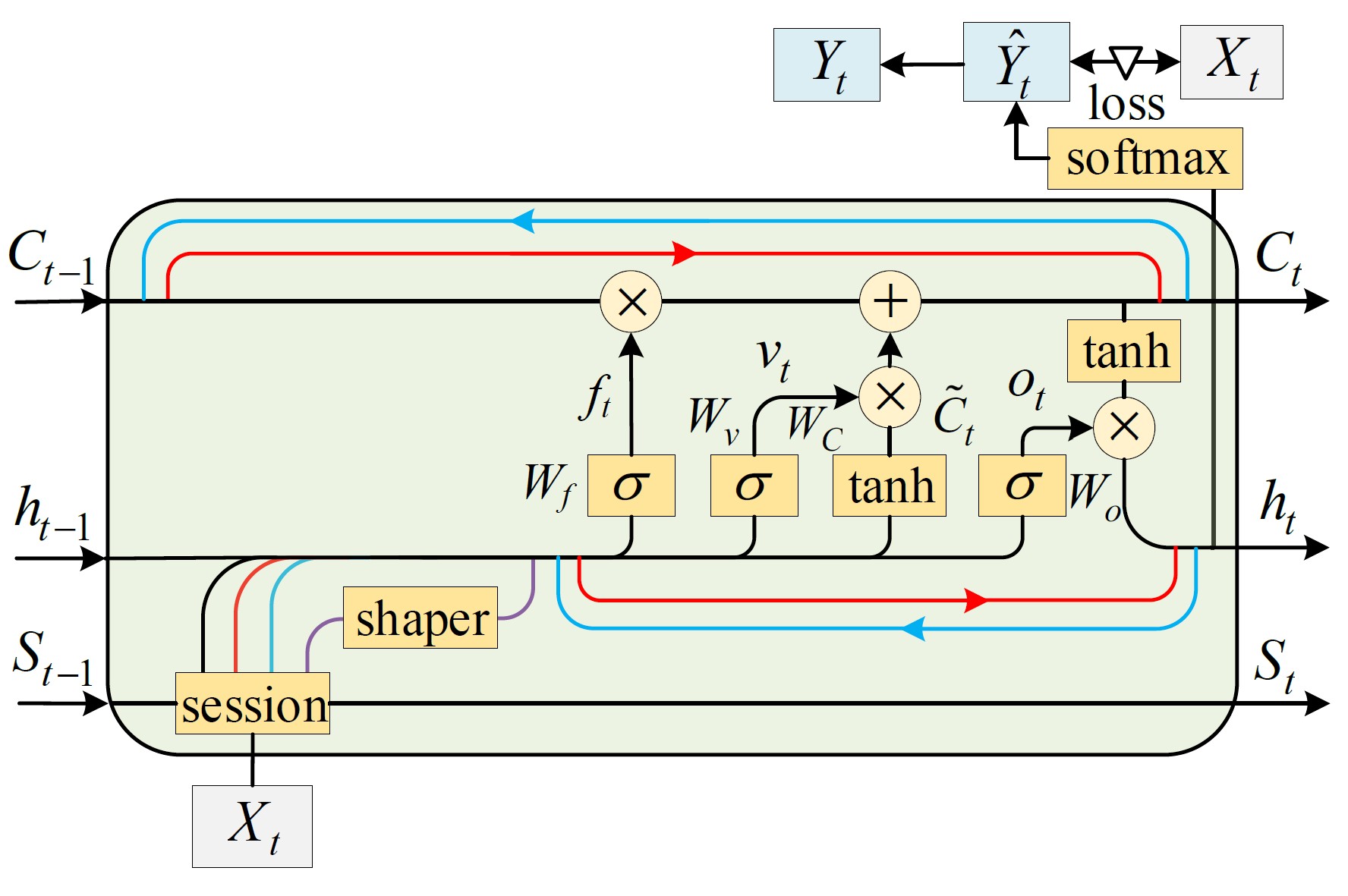

Traffic detection systems based on machine learning have been proposed to defend against cybersecurity threats, such as intrusion attacks and malware. However, they do not take the impact of network-induced phenomena into consideration, such as packet loss, retransmission, and out-of-order delivery. These phenomena introduce additional misclassifications in the real world. In this paper, we present ERNN, a robust and end-to-end RNN model that is specially designed against network-induced phenomena. At its core, ERNN is designed with a novel gating unit named the session gate that includes: (i) four types of actions to simulate common network-induced phenomena during model training; and (ii) a Mealy machine to update the states of the session gate, which adjusts the probability distribution of network-induced phenomena. Taken together, ERNN advances the state of the art by realizing model robustness to network-induced phenomena in an error-resilient manner. We implement ERNN and evaluate it extensively on both intrusion detection and malware detection systems. Through practical evaluation with dynamic bandwidth utilization and different network topologies, we demonstrate that ERNN can still identify 98.63% of encrypted intrusion traffic when facing about 16% abnormal packet sequences on a 10 Gbps dataplane. Similarly, ERNN can still robustly identify more than 97% of the encrypted malware traffic in multi-user concurrency scenarios. ERNN achieves about 4% higher accuracy than SOTA methods. Based on the Integrated Gradients method, we find that the gating mechanism can reduce the dependencies on local packets (termed dependency dispersion). Moreover, we demonstrate that ERNN possesses superior stability and scalability in terms of parameter settings and feature selection.

@article{zhao2023ernn,
  title = {ERNN: Error-resilient RNN for Encrypted Traffic Detection Towards Network-Induced Phenomena},
  author = {Zhao, Ziming and Li, Zhaoxuan and Jiang, Jialun and Yu, Fengyuan and Zhang, Fan and Xu, Congyuan and Zhao, Xinjie and Zhang, Rui and Guo, Shize},
  journal = {IEEE Transactions on Dependable and Secure Computing},
  pages = {1--18},
  year = {2023},
  month = feb,
  publisher = {IEEE},
  doi = {10.1109/TDSC.2023.3242134},
  url = {https://ieeexplore.ieee.org/abstract/document/10036003}
}